Ryan Skraba

Everything a Data Engineer needs to know

A File Format? A data format?

A data model?

A code generator?

A serializer for my existing code?

"Apache Avro™ is the leading serialization format for record data, and first choice for streaming data pipelines."

The Binary Serialization Story

{

"type" : "record",

"name" : "Sensor",

"namespace" : "iot",

"fields" : [

{"name" : "id", "type" : "string"},

{"name" : "start_ms", "type" : "long"},

{"name" : "defects", "type" : "int"},

{"name" : "deviation", "type" : "float"},

{"name" : "subsensors",

"type" : {"type" : "array",

"items" : "Sensor"}}

]

}

Gotcha: Determinism

Q: Does serialize(42) always equal serialize(42)?

A: Usually, but…

⚠️ Take care when using serialized bytes as keys in big data!
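To make the question concrete, here is a minimal sketch of how an Avro INT or LONG is written: zigzag encoding followed by a variable-length base-128 layout. The function names are illustrative, not part of any Avro SDK. For this type the answer is always "yes"; the slide does not list the exceptions, so none are assumed here.

```python
def zigzag(n: int) -> int:
    """Map a signed 64-bit integer to an unsigned one: 0, -1, 1, -2 become 0, 1, 2, 3."""
    return ((n << 1) ^ (n >> 63)) & 0xFFFFFFFFFFFFFFFF


def encode_long(n: int) -> bytes:
    """Encode an Avro INT/LONG: zigzag, then 7 bits per byte, low bits first."""
    z = zigzag(n)
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # high bit set: more bytes follow
        z >>= 7
    out.append(z)
    return bytes(out)


# The same integer always produces the same bytes.
assert encode_long(42) == encode_long(42) == b"\x54"
```

Small magnitudes stay small on the wire: 42 takes one byte, and so does -1.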

Gotcha: Precision

An 8-byte IEEE-754 floating point number has nearly 16 decimal digits of precision

An 8-byte LONG integer has nearly 19

Usually not a problem, except… JSON!
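A short sketch of why JSON is the exception: many JSON parsers (JavaScript's among them) hold every number as an 8-byte double, so a LONG near the top of its range does not survive the round trip. Python's `json` module keeps integers exact by default, so the lossy parser is simulated here with `parse_int=float`; that simulation is an assumption made for illustration.

```python
import json

big = 2**63 - 1  # largest Avro LONG: 9223372036854775807

# Python's own JSON parser keeps integers exact.
assert json.loads(json.dumps(big)) == big

# Simulate a parser that stores every number as a double.
lossy = json.loads(json.dumps(big), parse_int=float)

# The nearest double is 2**63, which is no longer a valid LONG.
assert int(lossy) == 2**63
assert int(lossy) != big
```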

{

"type" : "record",

"name" : "Sensor",

"namespace" : "iot",

"fields" : [

{"name" : "id", "type" : "string"},

{"name" : "start_ms", "type" : "long"},

{"name" : "defects", "type" : "int"},

{"name" : "deviation",

"type" : ["null", "float"]},

{"name" : "subsensors",

"type" : {"type" : "array",

"items" : "Sensor"}}

]

}

Gotcha: Meditation on the nature of nothing

Q: How many zero-byte datums (NULL) can you read from a zero-byte buffer?

A: … a bit more than infinity.
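The joke rests on how NULL is encoded: as zero bytes. A minimal sketch, with illustrative helper names, of NULL on its own and inside the `["null", "float"]` union from the schema above, where the branch index is written first as a zigzag varint:

```python
import struct


def encode_long(n: int) -> bytes:
    """Zigzag varint, as used for Avro INT/LONG and for union branch indexes."""
    z = ((n << 1) ^ (n >> 63)) & 0xFFFFFFFFFFFFFFFF
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_nullable_float(value) -> bytes:
    """Encode a value of the union ["null", "float"]."""
    if value is None:
        return encode_long(0)  # branch 0, then NULL itself: zero bytes
    return encode_long(1) + struct.pack("<f", value)  # branch 1, 4-byte little-endian float


def read_nulls(buffer: bytes, count: int) -> list:
    """Read `count` bare NULL datums; none of them consumes any input."""
    position = 0
    values = [None for _ in range(count)]  # the read position never moves
    assert position <= len(buffer)
    return values


assert encode_nullable_float(None) == b"\x00"
assert encode_nullable_float(1.5) == b"\x02\x00\x00\xc0\x3f"
assert len(read_nulls(b"", 1000)) == 1000
```

A bare NULL costs nothing on the wire, while a nullable field always costs at least the one-byte branch index.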

# Eight primitives

"null"

"boolean"

"int", "long"

"float", "double"

"bytes"

"string"

# Two collections

{"type": "array", "items": "iot.Sensor"}

{"type": "map", "values": "iot.Sensor"}

# Three named

{"type": "fixed", "name": "F2",

"size": 2}

{"type": "enum", "name": "E2",

"symbols": ["Z", "Y", "X", "W"]}

{"type": "record", "name": "iot.Sensor" ...}

# Plus union

BYTES

FIXED

⚠️ Don’t use MAPs in partition keys
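One plausible reason for the warning above, sketched under the assumption that the encoder writes map entries in whatever order the in-memory map yields them: two equal maps can serialize to different bytes. The helpers below are illustrative and handle only a map of STRING to LONG written as a single block.

```python
def encode_long(n: int) -> bytes:
    """Zigzag varint used for Avro INT/LONG, lengths, and block counts."""
    z = ((n << 1) ^ (n >> 63)) & 0xFFFFFFFFFFFFFFFF
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    raw = s.encode("utf-8")
    return encode_long(len(raw)) + raw  # byte length, then UTF-8 bytes


def encode_map(m: dict) -> bytes:
    """One block holding every entry, then a zero count to end the map."""
    if not m:
        return encode_long(0)
    out = bytearray(encode_long(len(m)))
    for key, value in m.items():  # iteration order decides the byte order
        out += encode_string(key) + encode_long(value)
    out += encode_long(0)
    return bytes(out)


first = {"x": 1, "y": 2}
second = {"y": 2, "x": 1}
assert first == second                          # equal as maps
assert encode_map(first) != encode_map(second)  # different as bytes
```

If those bytes were used as a partition key, equal records could land in different partitions.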

# Unqualified name

# Null or default namespace

{"type":"fixed","size":2,

"name":"FX"}

# Qualified with a namespace

{"type":"fixed","size":3,

"name":"size3.FX"}

{"type":"fixed","size":3,

"namespace":"size3",

"name":"FX"}

{"type":"fixed","size":4,

"name":"size4.FX"}

Namespaces are inherited inside a RECORD

{"type": "record",

"name": "Record",

"namespace": "size4", ⚠️(inherited)

"fields": [

{"name": "field0", "type": "size3.FX"},

{"name": "field1", "type": "FX"} 🔴

]

}

⚠️ Best practice: use a namespace!

# Sometimes works

{"type":"fixed","size":12,

"name":"utf.Durée"}

# Better

{"type":"fixed","size":12,

"name":"utf.Dur",

"i18n.fr_FR": "Durée"}

Avro names are never found in serialized data!

[_a-zA-Z][_a-zA-Z0-9]*

⚠️ Best practice: Follow the spec here.
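A quick way to check names against the pattern from the specification, sketched with Python's `re` module. The helper name is illustrative; each dot-separated part of a full name is checked on its own.

```python
import re

# One name component: a letter or underscore, then letters, digits, or underscores.
NAME_PART = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def is_valid_full_name(full_name: str) -> bool:
    """True if every dot-separated component matches the spec's pattern."""
    return all(NAME_PART.fullmatch(part) for part in full_name.split("."))


assert is_valid_full_name("utf.Dur")
assert not is_valid_full_name("utf.Durée")  # "é" is outside the allowed set
assert not is_valid_full_name("9lives")     # must not start with a digit
```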

{

"type" : "record",

"name" : "Sensor",

"namespace" : "iot",

"fields" : [

{"name" : "id", "type" : "string"},

{"name" : "start_ms", "type" : "long"},

{"name" : "defects", "type" : "int"},

{"name" : "deviation", "type" : "float"},

{"name" : "temp",

"type" : ["null", "double"],

"aliases": ["tmep", "temperature"],

"default": null},

{"name" : "subsensors",

"type" : {"type" : "array",

"items" : "Sensor"}}

]

}

Some attributes don’t take part in simple serialization.

Except when they do…

⚠️ Best practice: Check your SDK

Gotcha: (Java-only) CharSequence or String, ByteBuffer or byte[]?

By default, Avro reads STRING into Utf8 instances

{"type": "string", "avro.java.string": "String"}

Avro reads BYTES into ByteBuffer instances.

Be kind, rewind()

Gotcha: Embedding a schema in a schema

Succinct

{"type":"array","items":"long"}

Verbose

{"type":"array","items":{"type":"long"}}

Nope

{"type":"array","items":{"type":{"type":"long"}}}

Gotcha: {"type": {"type": … }}

{

"type" : "record",

"name" : "ns1.Simple",

"fields" : [ {

"name" : "id",

"type" : {"type" : "long"}

}, {

"name" : "name",

"type" : "string"

} ]

}

Conceptually fieldType and fieldName

Important when adding arbitrary properties!

Primitives use the simple form.

Remove all unnecessary attributes and all user properties.

Use full names, remove all namespace attributes.

List attributes in a specific order.

Normalize JSON strings, replace any \uXXXX by UTF-8.

Remove quotes and zero padding in numbers.

Remove unnecessary whitespace.
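The steps above (Parsing Canonical Form in the Avro specification) can be sketched in a few lines. This is a simplified illustration, not an SDK implementation: the function names are made up, and string and number normalization is left to `json.dumps`.

```python
import json

PRIMITIVES = {"null", "boolean", "int", "long", "float", "double", "bytes", "string"}
NAMED = {"record", "error", "enum", "fixed"}


def canonical(schema, enclosing_ns=""):
    """Return the schema with only canonical attributes, in canonical order."""
    if isinstance(schema, str):  # a primitive or a reference to a named type
        if schema in PRIMITIVES or "." in schema or not enclosing_ns:
            return schema
        return f"{enclosing_ns}.{schema}"
    if isinstance(schema, list):  # a union
        return [canonical(branch, enclosing_ns) for branch in schema]
    kind = schema["type"]
    if isinstance(kind, str) and kind in PRIMITIVES:
        return kind  # primitives use the simple form
    out, ns = {}, enclosing_ns
    if kind in NAMED:
        name = schema["name"]
        if "." in name:
            ns = name.rsplit(".", 1)[0]
        else:
            ns = schema.get("namespace", enclosing_ns)
            name = f"{ns}.{name}" if ns else name
        out["name"] = name  # full name replaces name + namespace
    out["type"] = kind
    if kind in ("record", "error"):
        out["fields"] = [{"name": f["name"], "type": canonical(f["type"], ns)}
                         for f in schema["fields"]]  # aliases, defaults, docs dropped
    elif kind == "enum":
        out["symbols"] = list(schema["symbols"])
    elif kind == "array":
        out["items"] = canonical(schema["items"], ns)
    elif kind == "map":
        out["values"] = canonical(schema["values"], ns)
    elif kind == "fixed":
        out["size"] = int(schema["size"])
    return out


def to_pcf(schema) -> str:
    """Serialize without whitespace; insertion order gives the attribute order."""
    return json.dumps(canonical(schema), separators=(",", ":"), ensure_ascii=False)


assert to_pcf({"type": "array", "items": {"type": "long"}}) == '{"type":"array","items":"long"}'
```

Because aliases and defaults are stripped, two schemas that differ only in those attributes share one canonical form.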

{

"type" : "record",

"name" : "Sensor",

"namespace" : "iot",

"fields" : [

{"name" : "id", "type" : "string"},

{"name" : "start_ms", "type" : "long"},

{"name" : "defects", "type" : "int"},

{"name" : "deviation", "type" : "float"},

{"name" : "temp",

"type" : ["null", "double"],

"aliases": ["tmep", "temperature"],

"default": null},

{"name" : "subsensors",

"type" : {"type" : "array",

"items" : "Sensor"}}

]

}

{"name":"iot.Sensor","type":"record","fields":[{"name":"id","type":"string"},{"name":"start_ms","type":"long"},{"name":"defects","type":"int"},{"name":"deviation","type":"float"},{"name":"temp","type":["null","double"]},{"name":"subsensors","type":{"type":"array","items":"iot.Sensor"}}]}

64-bit fingerprint: 5614D05749C8743

Deep dive into HOW, which answers a couple of WHY:

Why isn’t there a SHORT type? A UINT?

The basic Avro data model and how to write a schema

Tagless, streamlined binary encoding

Using Avro

API V1 (aka Actual) (aka Writer)

API V2 (aka Expected) (aka Reader)

Every Sensor V2 temperature field will be read with NULL
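A rough sketch of how a reader (expected) schema fills in what the writer (actual) schema never wrote. Real SDKs resolve the two schemas while decoding; this illustration works on an already-decoded dict, and the function name is hypothetical. Field names and aliases are taken from the Sensor schema above.

```python
def resolve_record(datum: dict, reader_fields: list) -> dict:
    """Project a record decoded with the writer schema onto the reader's fields."""
    out = {}
    for field in reader_fields:
        # The reader's own name first, then its aliases.
        for name in [field["name"], *field.get("aliases", [])]:
            if name in datum:
                out[field["name"]] = datum[name]
                break
        else:
            if "default" not in field:
                raise ValueError(f"no value and no default for field {field['name']!r}")
            out[field["name"]] = field["default"]
    return out  # writer fields unknown to the reader are dropped


reader_v2 = [
    {"name": "id", "type": "string"},
    {"name": "temp", "type": ["null", "double"],
     "aliases": ["tmep", "temperature"], "default": None},
]

# A V1 record has no temperature at all: the default (null) is used.
assert resolve_record({"id": "a", "defects": 0}, reader_v2) == {"id": "a", "temp": None}
# A record written with the misspelled name is picked up through the alias.
assert resolve_record({"id": "b", "tmep": 21.5}, reader_v2) == {"id": "b", "temp": 21.5}
```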

Stores and orders the schemas for a subject, and enforces compatibility guarantees when a new schema is introduced.

BACKWARDS: V4 ➜ V5 guaranteed; consumers update first

FORWARDS: V5 ➜ V4 guaranteed; producers update first

FULL: V4 ⬌ V5 guaranteed

Suffix _TRANSITIVE to apply to ALL older schemas

API V1 (aka Actual) (aka Writer)

API V3 Drop a field

API V4 Reorder fields

⚠️This one trick drives schema registries crazy!

Avro binary data never has names in it.

Don’t use a schema pair 😱

Stick the new name in the same position in the "writer" schema.

Let us never speak of this again.

Primitives can be widened / promoted:

INT ➜ to LONG, FLOAT, or DOUBLE

LONG ➜ to FLOAT, or DOUBLE

FLOAT ➜ to DOUBLE

STRING ⬌ BYTES are interchangeable

⚠️ LONG to DOUBLE loses precision but you’re asking for it
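The promotion rules above fit in a small lookup table. The names `PROMOTIONS` and `can_read` are illustrative; the table mirrors the list on the slide.

```python
# writer type -> reader types it may be promoted to
PROMOTIONS = {
    "int": {"long", "float", "double"},
    "long": {"float", "double"},
    "float": {"double"},
    "string": {"bytes"},
    "bytes": {"string"},
}


def can_read(writer: str, reader: str) -> bool:
    """True if data written as `writer` can be read as `reader`."""
    return writer == reader or reader in PROMOTIONS.get(writer, set())


assert can_read("int", "double")
assert can_read("string", "bytes") and can_read("bytes", "string")
assert not can_read("long", "int")  # narrowing is never allowed

# LONG to DOUBLE is allowed, but large values come back changed.
value = 2**53 + 1
assert int(float(value)) != value
```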

if both array ➜ the item type matches

if both map ➜ the value type matches

if both fixed ➜ the size is the same

if named ➜ the unqualified name is the same

if both enum ➜ symbols are resolved by name

if unknown enum symbol, use default

otherwise an error at deserialization time

Making a field optional:

LONG ➜ to UNION<NULL,LONG>

Making a field required: ⚠️

UNION<NULL,LONG> ➜ to LONG

Adding a message payload:

UNION<A,B,C,D,E> ➜ to UNION<A,B,C,D,E,F>

Removing a message payload: ⚠️

UNION<A,B,C,D,E> ➜ to UNION<A,B,D,E>

0xC301 + 8 byte fingerprint

Doesn’t specify how to store, fetch, resolve, decode…

⚠️ PCF drops evolution attributes!
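A sketch of the single-object framing: the two marker bytes, then the 8-byte little-endian CRC-64-AVRO fingerprint of the schema's canonical form, then the binary payload. The fingerprint routine follows the reference algorithm in the Avro specification; the helper names and the sample payload are illustrative.

```python
EMPTY = 0xC15D213AA4D7A795  # fingerprint of the empty input, also the polynomial


def _build_table() -> list:
    table = []
    for i in range(256):
        fp = i
        for _ in range(8):
            fp = (fp >> 1) ^ (EMPTY if fp & 1 else 0)
        table.append(fp)
    return table


FP_TABLE = _build_table()


def fingerprint64(data: bytes) -> int:
    """CRC-64-AVRO over the UTF-8 bytes of a schema's Parsing Canonical Form."""
    fp = EMPTY
    for byte in data:
        fp = (fp >> 8) ^ FP_TABLE[(fp ^ byte) & 0xFF]
    return fp


def single_object(canonical_schema: str, payload: bytes) -> bytes:
    """Frame an Avro binary payload: marker, little-endian fingerprint, payload."""
    fp = fingerprint64(canonical_schema.encode("utf-8"))
    return b"\xC3\x01" + fp.to_bytes(8, "little") + payload


message = single_object('"long"', b"\x54")  # the LONG 42 written with schema "long"
assert message[:2] == b"\xC3\x01"
assert len(message) == 2 + 8 + 1
```

The header only identifies the writer schema. Looking that schema up from the fingerprint is left to the application, as the slide notes.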

Obj1 + metadata

16 byte sync marker

Splittable

Compressible

Appendable

date: on INT (days since 1970)

time-millis: on INT (ms since midnight)

time-micros: on LONG (µs since midnight)

timestamp-millis: on LONG (ms since 0h00 1970 UTC)

timestamp-micros: on LONG (µs since 0h00 1970 UTC)

duration: on FIXED(12) for three little-endian UINT32: months, days, ms

decimal: on FIXED and BYTES, scale, precision

uuid: on STRING
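Logical types only annotate an underlying type, so converting to them is plain arithmetic. A sketch of four of the conversions listed above, with illustrative function names; the results are the values that would then be written as INT, LONG, FIXED(12), or BYTES.

```python
import struct
from datetime import date, datetime, timedelta, timezone
from decimal import Decimal

EPOCH_DATE = date(1970, 1, 1)
EPOCH_UTC = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_date(d: date) -> int:
    """date: days since 1970-01-01, stored on INT."""
    return (d - EPOCH_DATE).days


def to_timestamp_millis(dt: datetime) -> int:
    """timestamp-millis: milliseconds since the epoch in UTC, stored on LONG."""
    return (dt - EPOCH_UTC) // timedelta(milliseconds=1)


def to_duration(months: int, days: int, millis: int) -> bytes:
    """duration: three little-endian unsigned 32-bit integers in a FIXED(12)."""
    return struct.pack("<III", months, days, millis)


def to_decimal_bytes(value: Decimal, scale: int) -> bytes:
    """decimal: big-endian two's complement of the unscaled integer."""
    unscaled = int(value.scaleb(scale))  # digits beyond `scale` are truncated
    length = (unscaled.bit_length() + 8) // 8  # leaves room for the sign bit
    return unscaled.to_bytes(length, "big", signed=True)


assert to_date(date(1970, 1, 2)) == 1
assert to_timestamp_millis(datetime(1970, 1, 1, 0, 0, 1, tzinfo=timezone.utc)) == 1000
assert to_duration(1, 2, 3) == b"\x01\x00\x00\x00\x02\x00\x00\x00\x03\x00\x00\x00"
assert to_decimal_bytes(Decimal("12.34"), scale=2) == b"\x04\xd2"
```

The scale and precision live in the schema, not in the data, so a reader with the wrong scale will silently see a different number.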

Generic / Specific / Reflect

Code generation

Avro IDL

Avro in the Big Data Ecosystem

Byte Representation Consistency

Multi-language Interoperability

Distributed-Data Friendly: Splittable, Compressible, Appendable

Data (Record) Exchange Format (Serialization)

File Format

IDL / RPC

Most popular serialization format for Streaming Systems

Why does Avro excel for this use case?

Efficient format and Schema Evolution

Language-based serialization (e.g. Java, Pickle):

Not consistent, Language specific

JSON: Schemaless

CSV: Verbose, Inconsistent

XML: Too verbose

Protocol Buffers: IDL/RPC oriented (gRPC)

Apache Thrift: Protocol definition format

Flatbuffers: No need to decode in memory

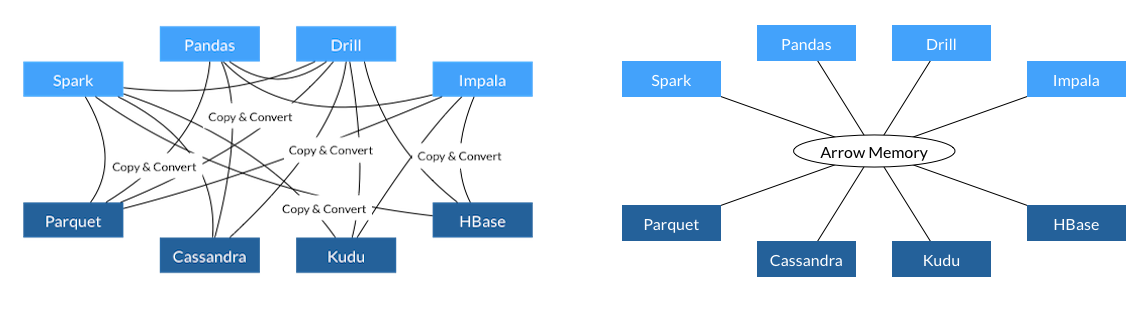

Apache Arrow: Memory layout format designed for language-agnostic interoperability.

Arrow2 (not Apache) can represent Avro records as Arrow records.

Supported by all Big Data Frameworks and (Cloud) Data-Warehouse

JSON, XML: Non-splittable

CSV: Not a standard

Protobuf: Low ecosystem support for the file use case.

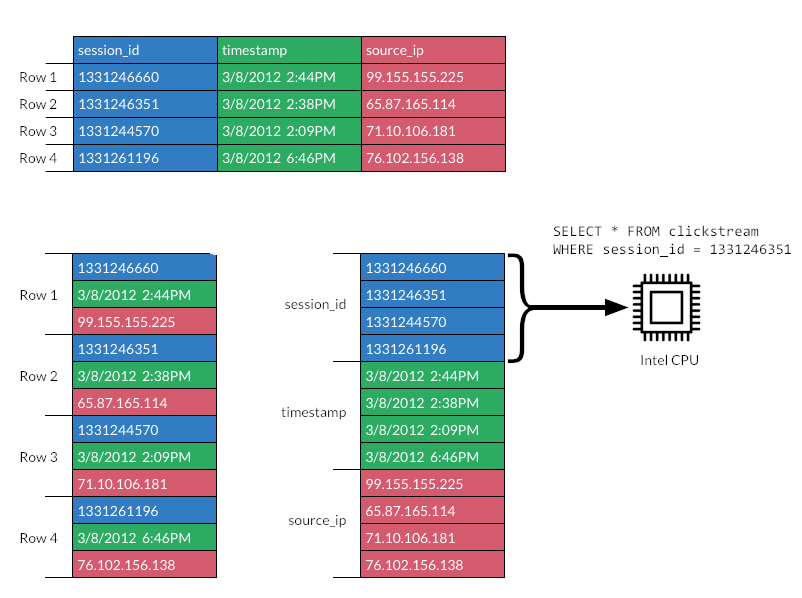

Parquet: Column-based, efficient for Analytics.

Columnar formats are better suited for analytics (column pruning, vectorization, stats).

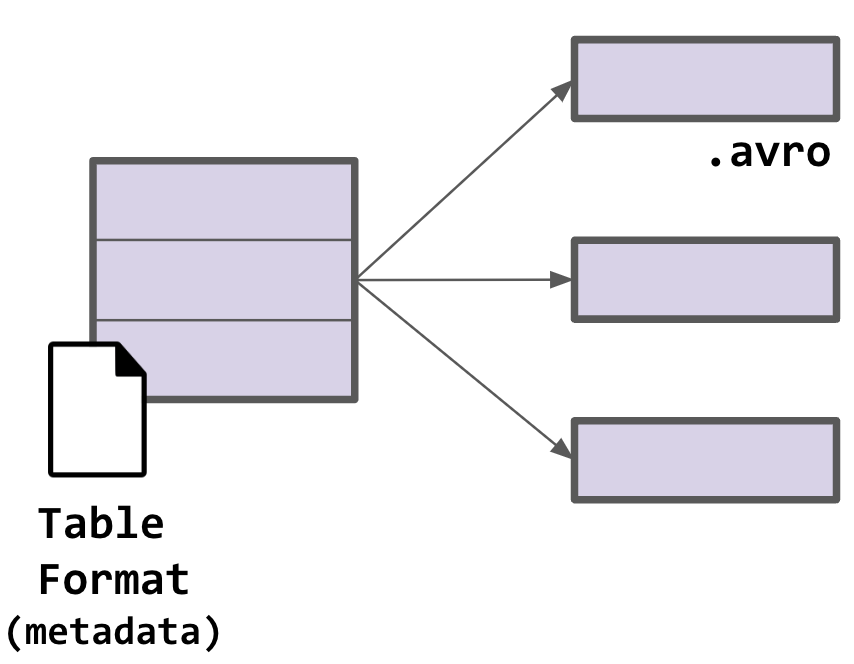

Table formats are metadata to represent all the files that compose a dataset as a “table”.

Avro can be one of those file formats (e.g. in Iceberg).

Ecosystem Support

Active community

Stable Specification, not broken since 1.3 (12 years!).

Logical Types in 1.8 are backwards/forwards compatible.

Language Support

Apache: C, C++, C#, Java, JavaScript, Perl, PHP, Python, Ruby, Rust

Non-Apache: Python (FastAvro), Go, Haskell, Erlang, etc.

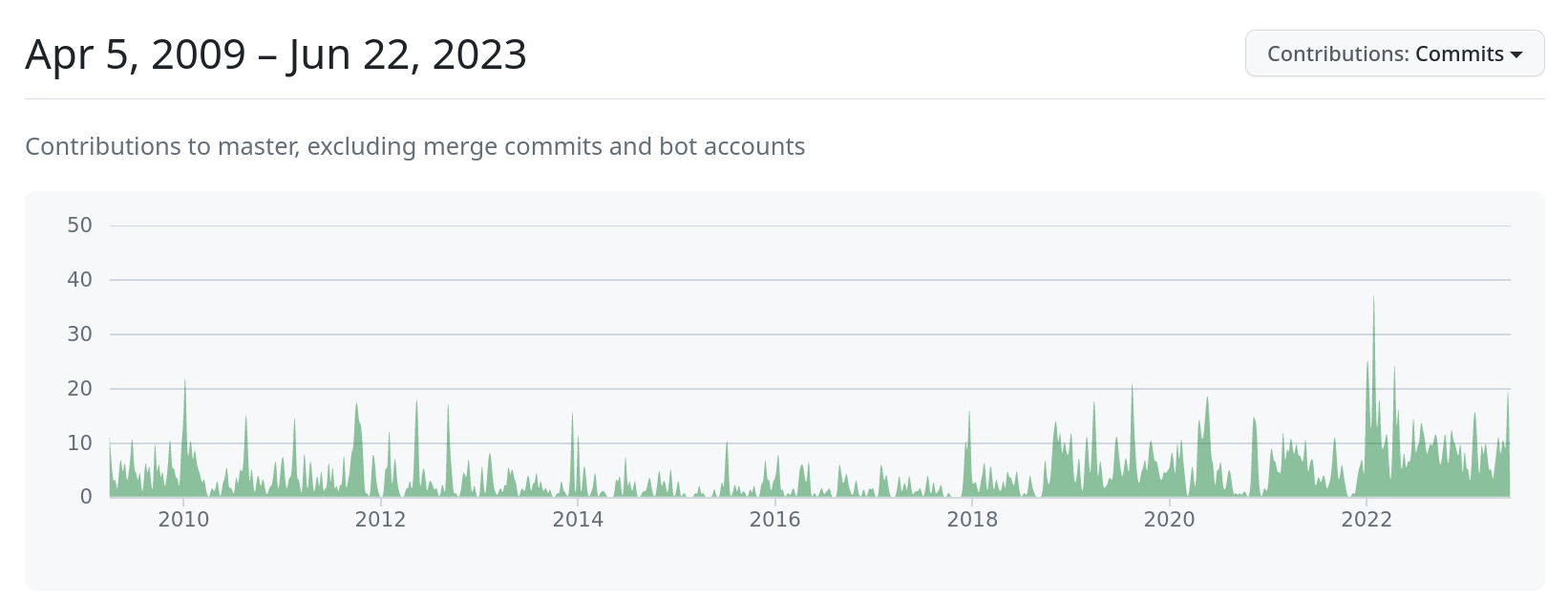

Improved Release Cadence

Improved Contributor Experience:

GitHub Actions, Docker, Codespaces

New Website

Rust Implementation (donated by Yelp)

Format

Is it worth breaking the spec at this point?

Is there room for improvement given the stability constraints?

Implementations

Semantic Versioning. How to do it with so many languages?

Automation, especially for the release.

Performance and Interoperability Tests

Improved documentation

No format is perfect for everything; different formats excel at different use cases.

Use Avro where it fits.

Join us?

https://avro.apache.org